What do you do if you read a Public Health COVID report in detail and learn that what is being presented to the public isn’t what the actual numbers indicate?

What I did was take my concerns to the Board of Health public meeting. While the 5 minutes they allocated wasn’t enough to cover the details, there wasn’t even any expression of concern about the introductory explanation of the situation I presented to them.

This post will cover the details of what is in the special report by Ottawa Public Health (OPH) on COVID in schools, and how those details don’t fit with a lot of its conclusions.

A quick summary of the analysis contained in this post – there are four aspects of the OPH data analysis that indicate the 15% of school cases they attribute to in-school spread is a significant undercount:

- No systematic attempt to find asymptomatic cases – and youth are recognized to have higher asymptomatic rates than other ages;

- Lack of knowledge on how effective the procedure to identify school outbreaks actually was;

- A change in testing guidelines one month into the school year skewed the case counts – and were it not for Ottawa’s unique wastewater levels measurements, we wouldn’t know how much of a skew this was;

- On top of all the above, OPH simply made the assumption that all school cases not traceable to another infected person in their school must have been contracted somewhere other than in school.

I also explore comments made by the local Medical Officer of Health, Dr. Vera Etches, downplaying the significance of airborne transmission.

Disclaimer: In their published report, OPH does include disclaimers to somewhat cover three of the four points above. However, these disclaimers disappear from most of the simplified public messaging and media reports, and regardless, I think they understate the scope of the distortion.

In my five-minute delegation (presentation) to the City of Ottawa Board of Health meeting on April 19, I spoke first to the need for prioritizing vaccines to essential workers, and then about workplace safety in general and the at-the-time-upcoming April 28 Day of Mourning for work-related deaths and injuries.

* See the footnote section of this piece for more details and links from the meeting itself.

My analysis is from the OPH report published in February – Special Focus: COVID-19 in Schools – that covered the first three months of the 2020-2021 school year, i.e. September through November, 2020. There is ongoing school and community data posted on the OPH website, but it doesn’t contain some of the contextual details and comprehensive perspective compiled into the report.

The report stated that 85% of COVID cases in schools (inclusive of both students and staff) were contracted outside of school environments.

Its specific numbers were:

- 888 tested-positive COVID cases were identified in school attendees;

- 11,376 school attendees had to stop attending school and self-isolate for two weeks because of in-school contact with a positive case (it was unclear how many had to do this multiple times); and,

- 134 school attendees who tested positive for COVID were identified as having contracted the case from someone else in their school.

Even if the analysis that follows is again not picked up on by public officials or media, I do hope some people will take note. Because of its particular relevance to them, I hope some teachers and students will take an interest, and that maybe it can even form the basis for a math lesson!?!

* I formerly taught math at some schools in both the OCDSB and OCCSB, mostly intern or occasional teacher positions, and through personal tutoring. I have learned the value of pedagogy that includes personally meaningful content!

—

Here are the four main sections examining the data:

—

DATA PROBLEM – Asymptomatic cases?

Almost all data on COVID is based on testing results – and the OPH school report is no exception.

But not everyone who has COVID gets tested, so those cases aren’t included in any data.

Of specific relevance to the schools situation, ‘asymptomatic’ people – who have COVID but don’t have symptoms – often don’t get tested and don’t know they are infected. And a lot of evidence indicates children and youth have a higher proportion of asymptomatic infection than the general population.

This would mean the counts that OPH has of COVID cases in schools are an undercount. The question to ask then is, how much of an undercount?

No systematic testing of children (or anyone) in Ottawa has been undertaken at any point, to identify all the cases that would otherwise be undetected. (The rotating ‘rapid testing’ clinics on weekends targeted to specific school-related populations, that began at the end of January, are a very different thing than systematic testing.)

OPH’s data on cases in schools, is almost* all people who went and got tested on their own initiative, and received a positive test result.

*: The semi-exceptions, explained in the next section, were people in the high-risk school contact cohort of someone found to have tested positive.

OPH’s report does include a disclaimer, “Considering the high rate of asymptomatic infection, particularly among young people[6], it is possible that only a portion of cases in schools were diagnosed.” and then goes on to indicate that “Approximately one quarter of source cases [of outbreaks] … were asymptomatic.”

Their [6] footnote is to an Ontario Public Health report, that found children to be asymptomatic approximately twice as often as adults (26.1% vs 13.0%) and slightly more than seniors (26.1% vs 19.7%). But again, even this data was non-random – it is only amongst those who got tested and were positive, and the Ontario Public Health report alluded to their own data bias, stating “Children are more likely to have mild or asymptomatic infection than adults and may therefore not have presented for care/testing[2]”.

The only way to achieve an accurate measurement of how many children with COVID are asymptomatic, is to do randomized or systematic testing – but similar to the Ontario report data, most of the research cited on asymptomatic rates is based on results of existing COVID testing, that is, of people who ‘self-select’ to be tested because they have criteria or reasons to decide to get tested.

Sidenote: this concept is explained well in the article “We Can’t Get a Handle on the Coronavirus Pandemic Without Random Testing” by Emily Oster:

Most people agree that random or universal testing is the best approach. But it’s also very hard to execute. Identifying a random sample of people and testing them is much, much more challenging than testing what we’d call a “convenience sample”—people whom it is easy to find and access. …

Given how hard this is, you might be tempted to think: Well, some data is better than no data. I’ll do something easier—maybe set up a mobile testing site and encourage people to come—and at least I’ll learn *something*.

This thinking is really problematic. Put simply: If we do not understand the biases in our sampling, the resulting data is garbage. …

The term ‘bias’, when used in statistical language, is a ‘value-neutral’ description rather than a pejorative.

I’m not sure what a legitimate measurement is of what percent of children and youth with COVID are asymptomatic, but I think it is higher than the 26.1% that Ottawa Public Health cites. One research article, using statistical models rather than random testing, estimated it might be up to 79%.

The difference between 26% of cases being asymptomatic (which is around 1 in 4, or 1 asymptomatic for every 3 symptomatic) versus 79% (which is roughly 8 in 10, or 4 asymptomatic for every 1 symptomatic) is a 12-fold difference in the ratio of asymptomatic to symptomatic cases; which is to say, *IF* the 80% is correct versus the ~25% that were identified, that would mean the existing count of 888 would actually be higher than 13000 cases!

(STUDENT NOTE – See if you can figure out the calculation to get 13000: where was the OPH data to indicate how many cases of the 888 were asymptomatic?)

That is a hypothetical number, but illustrates the potential magnitude of distortion.
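As a rough check on the 12-fold figure, here is a minimal Python sketch that treats each asymptomatic share as a ratio of asymptomatic to symptomatic cases and compares the two ratios. The function name `asymptomatic_per_symptomatic` is my own, and the sketch only covers the 12-fold comparison; it does not attempt the 13000 figure, which the student note leaves as an exercise.

```python
def asymptomatic_per_symptomatic(share):
    """Asymptomatic cases per symptomatic case, given the asymptomatic share."""
    return share / (1 - share)

# Rounded shares used in the text: about 25% versus about 80%.
rounded_fold = asymptomatic_per_symptomatic(0.80) / asymptomatic_per_symptomatic(0.25)

# Unrounded shares quoted earlier: 26.1% versus 79%.
unrounded_fold = asymptomatic_per_symptomatic(0.79) / asymptomatic_per_symptomatic(0.261)

print(f"rounded shares:   {rounded_fold:.1f}-fold")
print(f"unrounded shares: {unrounded_fold:.1f}-fold")
```

With the rounded shares the ratio comes out at 12; with the unrounded shares it is a little under 11, so the rounding matters somewhat but not to the overall point.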

—

Measuring outbreaks in Ottawa schools

Given there has been no systematic testing, OPH’s process for identifying or measuring outbreaks is important to understand. It is the only way they identify any cases beyond the ones that got tested on their own initiative, just like anyone else who goes to the testing clinics in the city.

OPH defined an outbreak as two or more cases amongst a group of students/teacher(s) in close contact.

(I assume this ‘group’ can generally be interpreted as a classroom, since the report said the average size of a close-contact group was 20 people.)

Finding an outbreak starts when one person in a school has a positive test result – which again, is only if they have a reason to think they need to be tested and then they also follow through with that and get tested.

Then, the others at the school in their close-contact / high-risk group (on average, 20 people) are asked to quarantine at home and get tested.

However, getting tested was only a recommendation – and OPH did not keep track of how many of the people in each close-contact group actually did get tested; they only know how many positive test results there were.

Note that the city of Montreal’s policy to encourage testing for student case close contacts, was that households could stop quarantining as soon as their child got a negative test for a possible infection via school contact; whereas OPH’s policy as articulated in the report, was that the child needed to quarantine for two weeks regardless of test result (and, it was only early the next year that Ontario started to require other household members to also quarantine when a child was).

If, on average, 10 of the 20 went and got tested, far fewer outbreaks would be discovered than if 18 of the 20 did. But this is a big unknown.
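To get a feel for how much the testing uptake could matter, here is a small Python sketch. It rests on assumptions that are mine, not OPH's: a contact group of 20, exactly one further infected person in that group, the people who get tested being a random subset of the group, and every infected person who is tested getting a positive result. Real uptake is unlikely to be random (people with symptoms are more likely to go), so treat this as an illustration only.

```python
from math import comb

def chance_second_case_found(group_size, tested, infected):
    """Probability that at least one infected contact is among those tested,
    assuming the tested contacts are a random subset of the group."""
    # Chance that every tested person comes from the uninfected contacts.
    all_missed = comb(group_size - infected, tested) / comb(group_size, tested)
    return 1 - all_missed

for tested in (10, 18):
    p = chance_second_case_found(group_size=20, tested=tested, infected=1)
    print(f"{tested} of 20 tested: {p:.0%} chance the second case is found")
```

Under these assumptions the chance of finding the second case, and therefore of declaring an outbreak, is 50% when 10 of 20 get tested and 90% when 18 of 20 do.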

Also of note is OPH’s definition, as stated in the report: “A high-risk contact in the school is someone who was in close contact, usually within 2 metres for longer than 15 minutes, without adequate personal protective equipment.” I note this criterion because, to me, this definition ignores the science about airborne transmission (see the ‘airborne’ section further down), and thus many of the potential exposures may not even have been identified as high-risk and then recommended for quarantine and testing.

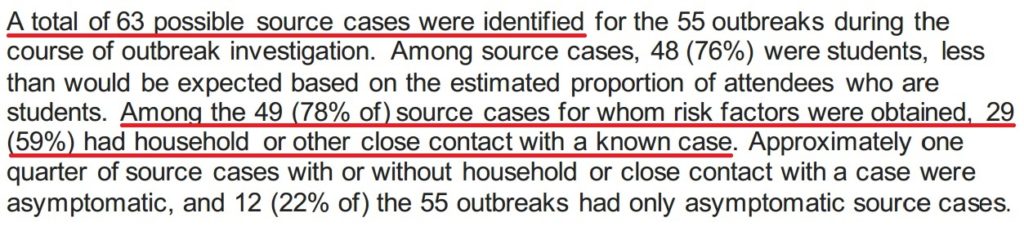

OPH reported having identified 55 outbreaks in schools, with a total of 63 suspected ‘source cases’. This sounds like there were eight – or possibly up to 12 – cases where the original case wasn’t the first case identified. This could be interpreted to say that in something like 14% – 22% of the 55 outbreaks, the source case wasn’t caught initially.

*Eight, since there are that many more suspected source cases than outbreaks (63 minus 55); or up to 12, since there were 12 identified outbreaks where all suspected source case(s) was/were asymptomatic.
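The 14% – 22% range can be reproduced with a few lines of Python. Note that using the 55 outbreaks as the denominator is my reading of the calculation (it is the choice that gives 14% and 22%); the variable names are mine.

```python
outbreaks = 55
suspected_source_cases = 63
outbreaks_with_only_asymptomatic_sources = 12

# Lower bound: more suspected source cases than outbreaks.
extra_source_cases = suspected_source_cases - outbreaks

low_share = extra_source_cases / outbreaks
high_share = outbreaks_with_only_asymptomatic_sources / outbreaks

print(f"{extra_source_cases} of {outbreaks} outbreaks: {low_share:.1%}")
print(f"{outbreaks_with_only_asymptomatic_sources} of {outbreaks} outbreaks: {high_share:.1%}")
```

Dividing by the 63 source cases instead would give a slightly lower range, roughly 13% to 19%.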

That may all sound a bit complicated. If so, stick with the central idea: that is, they only found outbreaks if at least one infected person decided to get themselves tested with no special prompting* from the school, and then someone in their contact group also got tested and was positive. And all measurement of in-school spread was based on that process.

(*: Though one could consider the recommended daily symptom screening as a basis to encourage testing)

The total measured count of cases resulting from outbreaks was 134, out of the total 888 known cases in all the school populations, which calculates to 15%.

OPH reports this as the full amount of transmission that took place within schools, while I would put forward that it is much more accurate to say that at least this per cent of the identified cases were spread in schools. (See section 4 for more on this point).

—

Outbreaks went down because … of a change in testing guidelines?

Now to add on to the understanding of how outbreaks are discovered, and how there may be outbreaks that don’t get discovered…

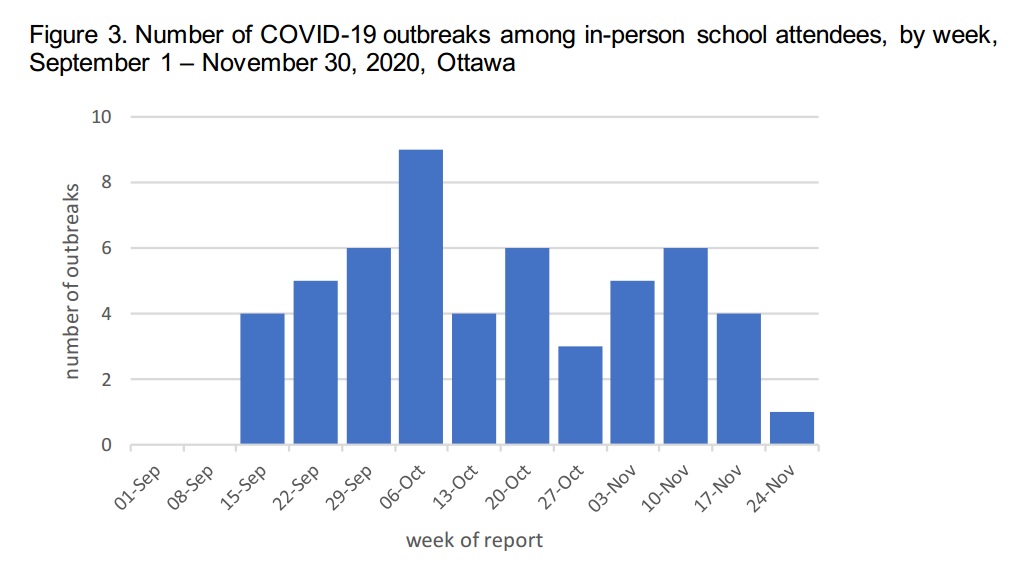

From the OPH report, here is the timeline graph of the number of discovered outbreaks – note the trend over time.

The trend over time – a steady rise in outbreaks from the start of the school year until the beginning of October, when the number drops in half and then doesn’t ever get as high again – is noteworthy because something changed at the end of September/ start of October.

The report described it like this:

… provincial testing guidelines for school attendance changed in late September such that children with only one non-specific symptom (e.g., sore throat, runny nose, congestion, headache, nausea, fatigue) could return to school after 24 hours if their symptoms were improving and were not required to be tested prior to return.

This is similar to the asymptomatic infections issue – but, in this case, in Ottawa we are in a unique situation that provides us data to get a sense of how much data distortion it caused (wait for it…)

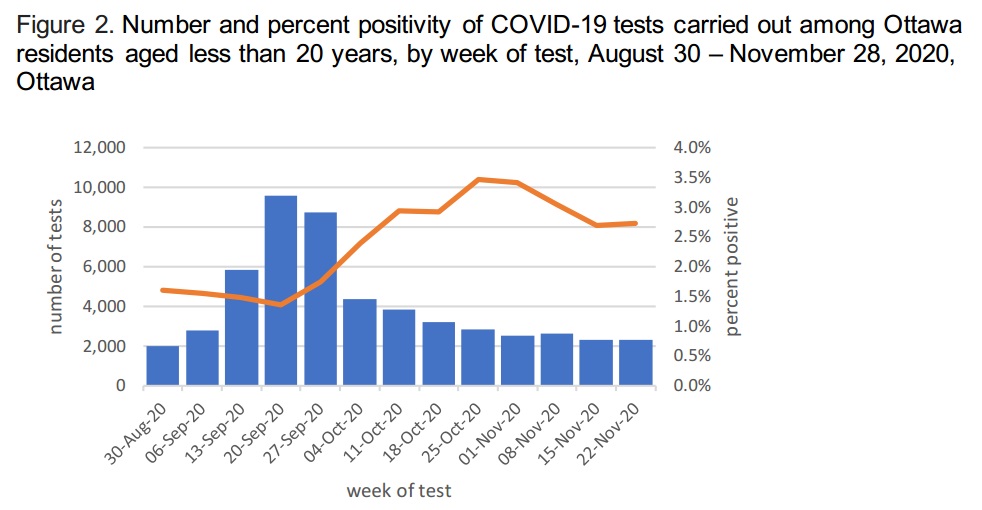

This next graph isn’t the unique part, but it contributes to it. It is also from the OPH report: the total number of tests per week for ages under 20, to see how many fewer tests the new guidelines resulted in. It also contains an orange line representing what per cent of tests were positive.

The number of youth tests peaked the week of September 22, with 9572 tests performed, and two weeks later had dropped to less than half that number, at 4381 tests, and decreased more after that.

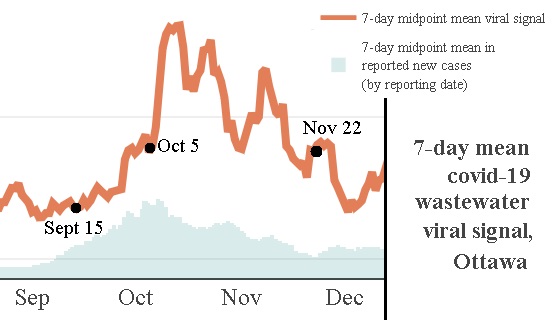

The unique-to-Ottawa part is that there is daily wastewater monitoring of COVID levels. The graph’s orange line below indicates a more accurate measurement of how many people had COVID than the number of positive tests did (the light blue part of the same graph).

The peak of identified outbreaks in schools, occurred just before the actual amount of the virus locally approximately doubled (taking into account the lag between the wastewater levels – that measures real-time volume of active virus – and the number of positive tests).

The overall, all-ages number of measured positive tests locally – the blue measurement on the graph – also peaked around then.

Then there was a large break from that point forward with the wastewater-levels measure – look at the ‘gap’, the amount of white space – between the two measurements.

Both the measured school outbreaks, and overall number of local positive tests, did afterwards continue to roughly parallel the wastewater measurements, but at a much reduced scale. This evidence demonstrates how many fewer positive test results were coming from the changed testing guidelines than before (and to note, ‘before’ wasn’t necessarily identifying 100% of people with COVID either).

The logical interpretation is that, after the change in testing guidelines, a greater percentage of the ‘actual’ outbreaks in schools were not being detected – not that the number of school outbreaks declined while COVID spread soared in the community. Only the measured number of outbreaks declined.

The unique ability to use the monitoring of wastewater COVID levels allows us to have some numerical measure of this discrepancy, rather than – as in the other sections here – only having an understanding of reasons that the case counts are lower than the actual levels, but not being able to confirm it.

You can see how this ‘drop’ or ‘peak’ in outbreaks was reported, unqualified, by some of the local news media.

It is also important to note how there may have been a compounding / combining effect for the changed testing on the measurement of outbreaks.

(Compounding, as in ‘compound interest’ type of effect; or, combining, as in the mathematical field ‘combinatorics’).

Fewer outbreaks would be discovered because the originating ‘source’ case didn’t get tested in the first place, and thus the group they were part of didn’t get notice to quarantine and get tested. On top of that, in the groups that did have an initial positive test case, triggering the recommendation to quarantine and get tested, it is a likely assumption that a smaller percentage of those groups would go and get tested because of the stricter testing criteria, making it less likely that a second case, and thus an outbreak, would be discovered.

The simple math example is if you have two things that are both half of what they were, and multiply them together, you get a quarter of the original result. So the number of detected outbreaks may have decreased in greater proportion than the number of detected individual cases decreased, and thus the count of ‘in-school spread’ cases would be a reduced percentage of the count of total school cases.
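Here is that ‘half times half’ idea as a tiny Python sketch. The 50% values are placeholders for illustration only (nobody measured them), and the sketch assumes the two steps are independent of each other, which is a simplification.

```python
def share_of_outbreaks_detected(source_case_tested, contact_case_tested):
    """An outbreak is only detected if BOTH the source case and a second case
    in the contact group get tested, so the two shares multiply."""
    return source_case_tested * contact_case_tested

# Hypothetical values: each step drops to half of what it was before.
cases_detected_after = 0.5
outbreaks_detected_after = share_of_outbreaks_detected(0.5, 0.5)

print(f"individual cases detected: {cases_detected_after:.0%} of before")
print(f"outbreaks detected:        {outbreaks_detected_after:.0%} of before")
print(f"outbreaks relative to cases: {outbreaks_detected_after / cases_detected_after:.0%}")
```

In this made-up example, detected cases fall to half of what they were while detected outbreaks fall to a quarter, so the ‘in-school spread’ share of total school cases would look about half as big as before, with no change in what was actually happening.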

I think it is a likely assumption, that a smaller proportion of close contacts would decide to get tested under the new testing guidelines, but we don’t know for sure.

An assumption that I think is less likely, is the fourth aspect of the report’s data that I think is a problem.

—

Can you report assumptions as conclusions?

All of the above analysis is about problems in measurement, that likely contributed to getting lower-than-actual numbers of spread in schools.

Now though, we look at a problem with taking the data that was collected – even if it was an undercount – and saying it means something different than what it says. This is the misinterpretation of data.

The numbers they did have showed that 15% of identified cases in schools were traceable to another identified (source) case in schools.

That doesn’t necessarily mean that all the rest (85%) weren’t from spread in schools – but that was the simplified message put out by the report and reported as fact by the media / in headlines.

The report didn’t provide tracing information for all the remaining 85% of identified cases. But it did provide it for some of them: the group of 63 cases that were suspected as ‘source’ cases for the known outbreaks.

(This is what is termed a ‘sample’ in statistical language. It is approximately 1/12 the size of the overall 85%. There isn’t any info provided to say whether this sample is representative of, or has different characteristics – a ‘bias’ – from the overall.)

Here is the relevant excerpt:

Of the 63 cases, they were able to get information about 49 of them, and found 29 to be traceable to a case outside of schools.

29 out of 49 is just under 60%, and 29 of 63 is slightly under 50%.

If we directly extrapolate from this to the full 85% that weren’t identified as in school-spread, it would be 40% or so traced to a source outside of schools, over 40% with no known origin, and 15% traced to in school spread.

More recent information reported by CBC (April 18, 2021) on overall (not only school) case tracing, stated:

According to OPH, between March 22 and April 5, only 23 per cent of cases were linked to a close contact, while 11 per cent had no known link, and 58 per cent had no information available. Fewer than eight per cent of cases were linked to travel or an outbreak.

That has a higher percentage of cases with ‘no information available’ (58%) than in the report on schools (63-49 = 14, or 22%) – remember that cases were spiking in early April and OPH tweeted midway through that period that they could no longer keep up with contact tracing.

But of the cases with known risk factors, that weren’t from outbreaks or travel, the March 22 – April 5 general numbers (a different ‘sample’) had 23% with a link to close contact versus 11% with no known link, which for the cases with known info, is approximately a 2:1 ratio, or more specifically, 68% vs 32%. This compares fairly closely to the schools report ‘sample’ that, of the cases with known info, had 29 (59%) with a link to an infected close contact versus 20 (41%) with no known link to an identified case.
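For anyone who wants to redo this comparison, here is a short Python sketch that turns each pair of numbers into shares of the cases with known info. The function name `known_info_split` is mine; the inputs are the figures quoted from CBC and from the OPH schools report.

```python
def known_info_split(linked, not_linked):
    """Shares of linked vs not-linked cases, among cases with known info only."""
    total = linked + not_linked
    return linked / total, not_linked / total

# General Ottawa numbers, March 22 to April 5 (these inputs are percentages).
general_linked, general_unlinked = known_info_split(23, 11)

# Schools report sample of source cases with information (these are counts).
school_linked, school_unlinked = known_info_split(29, 20)

print(f"general: {general_linked:.0%} linked vs {general_unlinked:.0%} no known link")
print(f"schools: {school_linked:.0%} linked vs {school_unlinked:.0%} no known link")
```

The two splits are not identical, but both have clearly more linked than unlinked cases, somewhere between a 3:2 and a 2:1 ratio.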

So that gives some confidence that those percentage numbers in the school sample aren’t too distorted for whatever reason.

And, if we ‘extrapolate’ (roughly apply this percentage to the overall 85% of school cases not traced to another in-school case), we get somewhere around 250 school cases with an unknown source of infection, versus 500 that were linked to a known outside-of-school case.

The OPH report doesn’t provide any reasoning for the assumption they made, but they took that whole group of identified school cases that had unidentified sources of infection, and said – for the purposes of their conclusion – that they all must have got infected outside of schools.

If we use the extrapolation numbers, that changes the conclusion from 750 community spread vs 134 school spread, to 500 community spread vs 134 school spread (and 250 unknown infection source). Which is to say, the reported conclusion of 85% vs 15% based on the assumption, becomes 57% vs 15%, with 28% unknown source, when only reporting what is known.
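The extrapolation can be sketched in Python as follows. I am assuming the rough 2:1 split (linked to an outside case versus unknown source) discussed above; the function name `extrapolate` and the use of that exact ratio are my choices, so treat the output as approximate.

```python
total_school_cases = 888
traced_to_in_school = 134
not_traced_to_in_school = total_school_cases - traced_to_in_school  # 754

def extrapolate(linked_weight, unknown_weight):
    """Split the cases not traced to in-school spread by a linked:unknown ratio."""
    linked = not_traced_to_in_school * linked_weight / (linked_weight + unknown_weight)
    unknown = not_traced_to_in_school - linked
    return linked, unknown

# Assumed split: roughly 2 linked cases for every 1 with an unknown source.
linked, unknown = extrapolate(2, 1)

for label, count in [("linked to outside-of-school case", linked),
                     ("traced to in-school spread", traced_to_in_school),
                     ("unknown source", unknown)]:
    print(f"{label}: about {count:.0f} cases, {count / total_school_cases:.0%}")
```

Swapping in the school sample's own split, `extrapolate(29, 20)`, gives roughly 446 linked and 308 unknown, which moves the numbers further away from the 85% that was reported.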

—

Those were the four points I’d looked at before speaking to the Board of Health at their April 19 meeting. And I only spoke to two of the four, in a rushed manner, in the short opportunity I had.

But at the meeting, another member of the public – a representative of child care workers – mentioned something that was then followed up on by the board chair asking Dr. Etches to clarify her/OPH’s position on ‘airborne spread.’

What she said was that, in her / OPH’s position, while airborne transmission was a risk, COVID was spreading primarily through droplets. That raises another problem.

(TO BE CONTINUED…)

Interdependent media & in-person learning opportunities for those who are inspired to be part of movements for social justice.
